A single ordinary, flat photo can now be turned into a 3D world that you can walk into and look around freely, right on the Apple headset you are wearing. It sounds like something out of a science fiction movie, but a new app called Splat has made it a reality on Apple's Vision Pro.

App launch and core features

The app, created by Portuguese developer Rob, was recently released for free on the Apple App Store. Its core technology is SHARP, an artificial intelligence model that Apple itself released as open source. Using the app is simple and straightforward, with almost no learning curve.

Open Splat on Vision Pro, pick a photo from your photo library, and the app starts processing it. After about 20 seconds, the static 2D image has been turned into a 3D scene.

Comparison with the built-in system feature

Apple's visionOS 26 update for Vision Pro last fall already included a feature called "spatial photos" that also presents photos in three dimensions. However, what it produces is a "volumetric photo": the range within which users can move around and view it in virtual space is very limited.

Think of it as a 3D image fixed in place that can only be viewed from a narrow range of angles around it. Splat, by contrast, generates a genuine 3D scene: users can not only look around it, but literally "walk" into the picture and explore different parts of the scene from the sides and from behind.

Hands-on experience and results

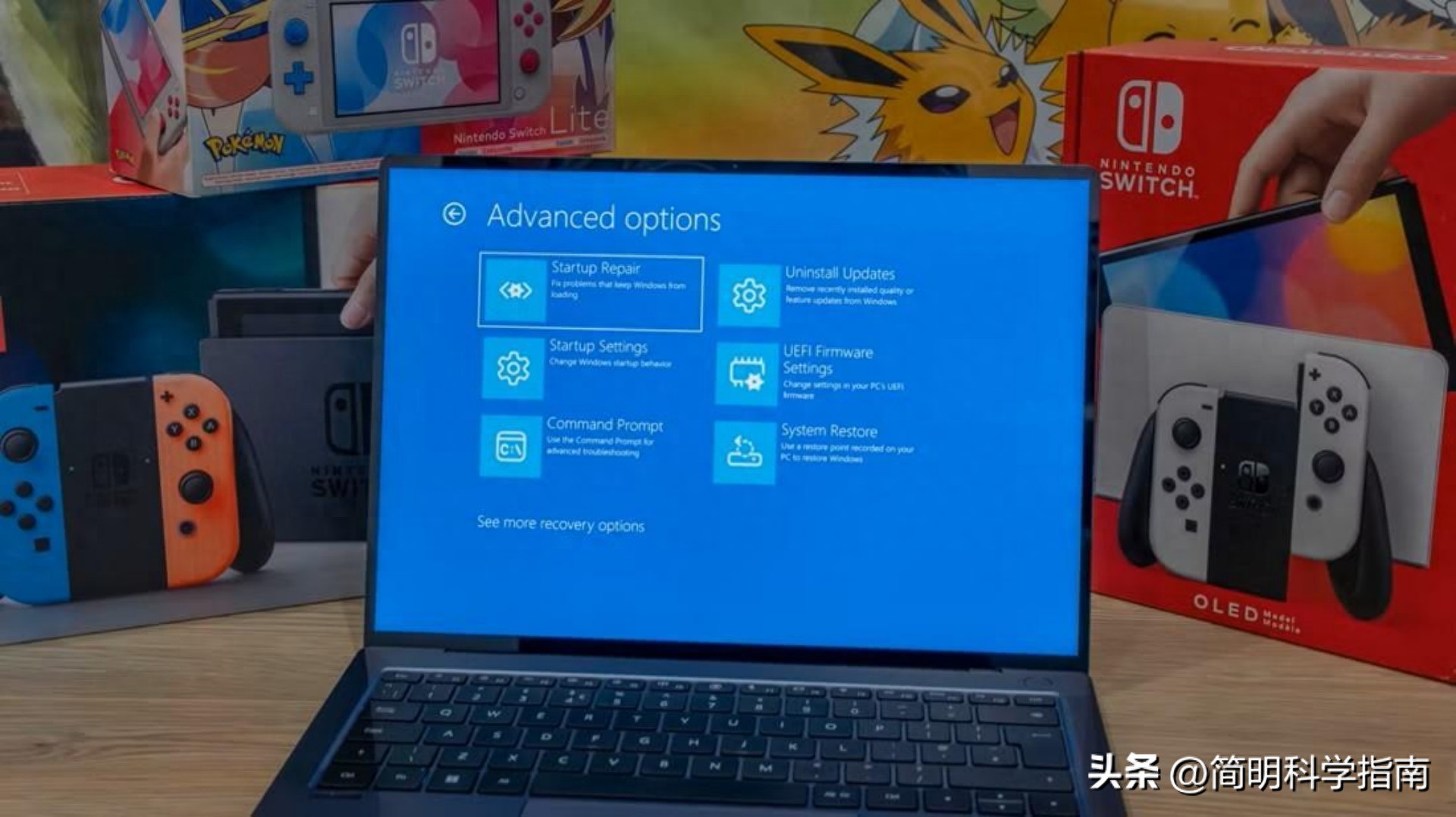

In hands-on tests by overseas media on a Vision Pro equipped with the M5 chip, Splat took about 20 seconds to process a photo, while the system's built-in spatial photo feature finishes almost instantly. Part of the delay comes from the heavy computation the SHARP model requires, and it may also reflect how well the app itself is optimized.

In terms of output quality, Splat still has noticeable shortcomings. Once the viewing angle moves away from the angle at which the original photo was taken, parts of the scene gradually become blurry and detail at the edges is lost. This reflects the challenges that on-device 3D reconstruction still faces.
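To make "moving away from the original shooting angle" concrete, the Python sketch below measures it as the angle, taken at the scene's center, between the direction to the original camera and the direction to the viewer's current head position. This is an illustrative assumption, not how Splat or SHARP measures anything; the function names and the single "scene center" point are hypothetical.

```python
import math
from typing import List, Sequence


def _sub(p: Sequence[float], q: Sequence[float]) -> List[float]:
    """Component-wise p - q for 3D points."""
    return [a - b for a, b in zip(p, q)]


def angle_deg(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle between two non-zero vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("camera and viewer must not sit exactly at the scene center")
    cos_t = max(-1.0, min(1.0, dot / norm))  # clamp rounding noise before acos
    return math.degrees(math.acos(cos_t))


def view_deviation_deg(source_camera: Sequence[float],
                       viewer: Sequence[float],
                       scene_center: Sequence[float]) -> float:
    """Hypothetical metric: how far the viewer has orbited away from the original shot."""
    return angle_deg(_sub(source_camera, scene_center), _sub(viewer, scene_center))


if __name__ == "__main__":
    photo_cam = (0.0, 1.6, 2.0)  # where the photo was taken (assumed, in meters)
    center = (0.0, 1.6, 0.0)     # assumed center of the reconstructed scene
    # Viewer has stepped around to the side of the scene.
    print(view_deviation_deg(photo_cam, (2.0, 1.6, 0.0), center))
```

For example, a viewer who walks a quarter of the way around the scene gets a deviation of 90 degrees. Intuitively, the larger this angle, the more of what the viewer sees was never captured by the single original photo and has to be inferred, which is where blur and missing edge detail tend to appear.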

The key technical breakthrough behind it

The main significance of SHARP, the model Splat relies on, is that it can run complex 3D reconstruction on consumer-grade devices. In the past, AI techniques like this generally relied on high-performance cloud servers and could take several minutes to process a single image.

SHARP is optimized to make efficient use of a device's own CPU, as well as hardware acceleration such as Apple's Metal or NVIDIA's CUDA (Compute Unified Device Architecture). On most modern hardware, the core processing time has been cut to under a second. This significantly lowers the technical and cost barriers to generating high-quality 3D scenes.
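Running the same model on the CPU, on Apple GPUs through Metal, or on NVIDIA GPUs through CUDA usually comes down to choosing a compute device at startup. The Python sketch below shows how that choice commonly looks in a PyTorch-based pipeline; it assumes, rather than quotes, SHARP's actual setup. `torch.cuda.is_available()` and `torch.backends.mps.is_available()` are real PyTorch calls (MPS is PyTorch's Metal backend), while `pick_device` and `detect_device` are hypothetical helper names.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Choose a PyTorch device string, preferring CUDA, then Metal (MPS), then CPU."""
    if cuda_available:
        return "cuda"  # NVIDIA GPU via CUDA
    if mps_available:
        return "mps"   # Apple GPU via Metal Performance Shaders
    return "cpu"       # portable fallback that runs everywhere


def detect_device() -> str:
    """Query the local PyTorch install, if any, and pick a device."""
    try:
        import torch
    except ImportError:
        return "cpu"  # no PyTorch installed: assume CPU-only
    mps = getattr(torch.backends, "mps", None)  # missing on very old PyTorch builds
    return pick_device(
        torch.cuda.is_available(),
        bool(mps is not None and mps.is_available()),
    )


if __name__ == "__main__":
    print(detect_device())
```

Keeping the preference order in a pure function (`pick_device`) separate from the PyTorch query makes the policy easy to test on a machine without a GPU, or without PyTorch at all.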

Open source strategy and industry impact

In a rare move, Apple open-sourced SHARP this time, publishing the model code in full on GitHub. Any developer or research institution can download it, use it, and even improve on it. This could speed up the wider adoption of the technology and spur new applications across the industry.

The 3D scenes SHARP generates use a mainstream technique called "Gaussian splatting," which builds a scene out of millions of tiny, semi-transparent colored blobs arranged in 3D space, so that it can be rendered smoothly from any angle. The final output is a 3D file in the widely supported .ply format.
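Smooth rendering from any angle comes from how the translucent blobs are blended. The Python sketch below shows the front-to-back alpha compositing commonly used by Gaussian splatting renderers, for a single pixel and under simplifying assumptions: each Gaussian has already been projected to a 2D ellipse on screen and has one fixed color. Real renderers also project from 3D, model view-dependent color, and run in parallel on the GPU. This is not SHARP's or Splat's code, and `ProjectedSplat`, `falloff`, and `shade_pixel` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]


@dataclass
class ProjectedSplat:
    """One Gaussian already projected onto the image plane (simplified)."""
    mean: Tuple[float, float]        # center in pixel coordinates
    cov: Tuple[float, float, float]  # 2x2 covariance [[a, b], [b, c]] stored as (a, b, c)
    color: RGB                       # fixed RGB in [0, 1]
    opacity: float                   # base opacity in [0, 1]
    depth: float                     # distance from the camera, used for sorting


def falloff(s: ProjectedSplat, px: float, py: float) -> float:
    """Unnormalized 2D Gaussian exp(-0.5 * d^T Sigma^-1 d) at pixel (px, py)."""
    a, b, c = s.cov
    det = a * c - b * b
    inv_a, inv_b, inv_c = c / det, -b / det, a / det  # inverse of the 2x2 covariance
    dx, dy = px - s.mean[0], py - s.mean[1]
    return math.exp(-0.5 * (inv_a * dx * dx + 2 * inv_b * dx * dy + inv_c * dy * dy))


def shade_pixel(splats: List[ProjectedSplat], px: float, py: float,
                background: RGB = (0.0, 0.0, 0.0)) -> RGB:
    """Front-to-back alpha compositing of translucent splats at one pixel."""
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through to deeper splats
    for s in sorted(splats, key=lambda sp: sp.depth):  # nearest first
        alpha = min(0.99, s.opacity * falloff(s, px, py))
        for k in range(3):
            out[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return (out[0] + transmittance * background[0],
            out[1] + transmittance * background[1],
            out[2] + transmittance * background[2])


if __name__ == "__main__":
    red = ProjectedSplat((5.0, 5.0), (1.0, 0.0, 1.0), (1.0, 0.0, 0.0), 0.5, 1.0)
    blue = ProjectedSplat((5.0, 5.0), (1.0, 0.0, 1.0), (0.0, 0.0, 1.0), 1.0, 2.0)
    print(shade_pixel([blue, red], 5.0, 5.0))  # input order does not matter; depth does
```

Sorting nearest-first lets each blob contribute in proportion to the light that closer blobs still let through (`transmittance`), and the early exit stops work once a pixel is effectively opaque. In the usage example, the half-transparent red blob in front supplies half the pixel's color and the blue blob behind it fills most of the rest.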

Future prospects and practical applications

The technology has a wide range of potential applications, from virtual home tours on real estate and e-commerce platforms to virtual field trips in education. Individual users could also use it to relive travel memories or see family photos in a new way. It gives everyone the chance to become the creator of their own world.

A low-cost path to 3D reconstruction has opened up, and it runs on the device itself. That means in the future we may be able to record complete 3D spatial information with a single shot on our own phones or glasses. This would not only change how we record the world, but could also give rise to new forms of content and interaction.

Which old photo on your phone would you most like to turn into a 3D scene you can walk into? Share your thoughts in the comments, and if you found this article useful, don't forget to like and share it!