A controversial incident involving open source code has unexpectedly put the spotlight on the standardization of a protocol that has quietly been working behind the scenes of AI tool development.

The core value of protocol standardization

In software development, protocol standards define the rules by which different systems talk to each other. Without a unified standard, every tool has to build a custom interface for each platform, which wastes a great deal of effort. The Model Context Protocol (MCP) emerged precisely to bring order to the chaotic way AI models call external tools: it defines a unified communication standard through which models access data sources and APIs.

In the past, developers had to write adaptation code again and again for each large language model platform. Now they only need to develop against the MCP protocol. In practice, this means a tool built for one platform can run without changes on any other platform that also supports MCP. This "write once, run anywhere" property greatly improves development efficiency and lowers the technical barrier to entry.

From "each working independently" to "unified dispatch"

Before MCP, the ways large AI models called external tools were fragmented and incompatible. Mainstream vendors such as OpenAI each defined their own dedicated tool calling protocols. It was as if every appliance brand used its own incompatible plug: users had to prepare a dedicated socket for each appliance, which was extremely inconvenient. Likewise, developers had to maintain a separate set of tool calling logic for each AI platform.
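To make the "one socket per appliance" problem concrete, here is a minimal Python sketch, assuming a hypothetical `get_weather` tool that a developer describes once in an internal format. Each vendor then needs its own adapter function. The field names follow the OpenAI-style (`type` / `function` / `parameters`) and Anthropic-style (`input_schema`) tool declarations as publicly documented at the time of writing; only the declaration is shown, so check each vendor's current API reference before relying on it.

```python
import json

# Hypothetical internal description of one tool, written once by the developer.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "schema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def to_openai_style(tool: dict) -> dict:
    """Adapter producing an OpenAI-style function-calling tool entry."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["schema"],
        },
    }


def to_anthropic_style(tool: dict) -> dict:
    """Adapter producing an Anthropic-style tool entry."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["schema"],
    }


# One adapter per vendor: every additional platform means another function to maintain.
ADAPTERS = {
    "openai": to_openai_style,
    "anthropic": to_anthropic_style,
}

if __name__ == "__main__":
    for vendor, adapt in ADAPTERS.items():
        print(vendor, json.dumps(adapt(WEATHER_TOOL)))
```

The schema itself is identical in both outputs; only the wrapping differs, and that wrapping is exactly the per-platform logic the paragraph above describes.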

The MCP protocol plays the role of a "universal socket". It abstracts tool calling into a set of standardized interfaces and message formats. Any AI model or application that supports MCP can use every protocol-compliant tool and service in the ecosystem directly, like plugging an appliance into a standard socket, with no additional adaptation work.
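Under MCP, a tool is described once in a single shape that every compliant client understands. The sketch below assumes a hypothetical `get_weather` tool and shows the shape of an MCP server's reply to a `tools/list` request: a JSON-RPC 2.0 response whose `result.tools` entries carry a `name`, a `description`, and a JSON Schema under `inputSchema`. It illustrates the message shape only, not a complete server; the MCP specification lists the full set of fields.

```python
import json

# A hypothetical tool described once, in the shape MCP uses for tool listings.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def tools_list_response(request_id: int, tools: list[dict]) -> dict:
    """Build a JSON-RPC 2.0 response to a `tools/list` request."""
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": tools}}


if __name__ == "__main__":
    print(json.dumps(tools_list_response(1, [WEATHER_TOOL]), indent=2))
```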

How MCP actually works

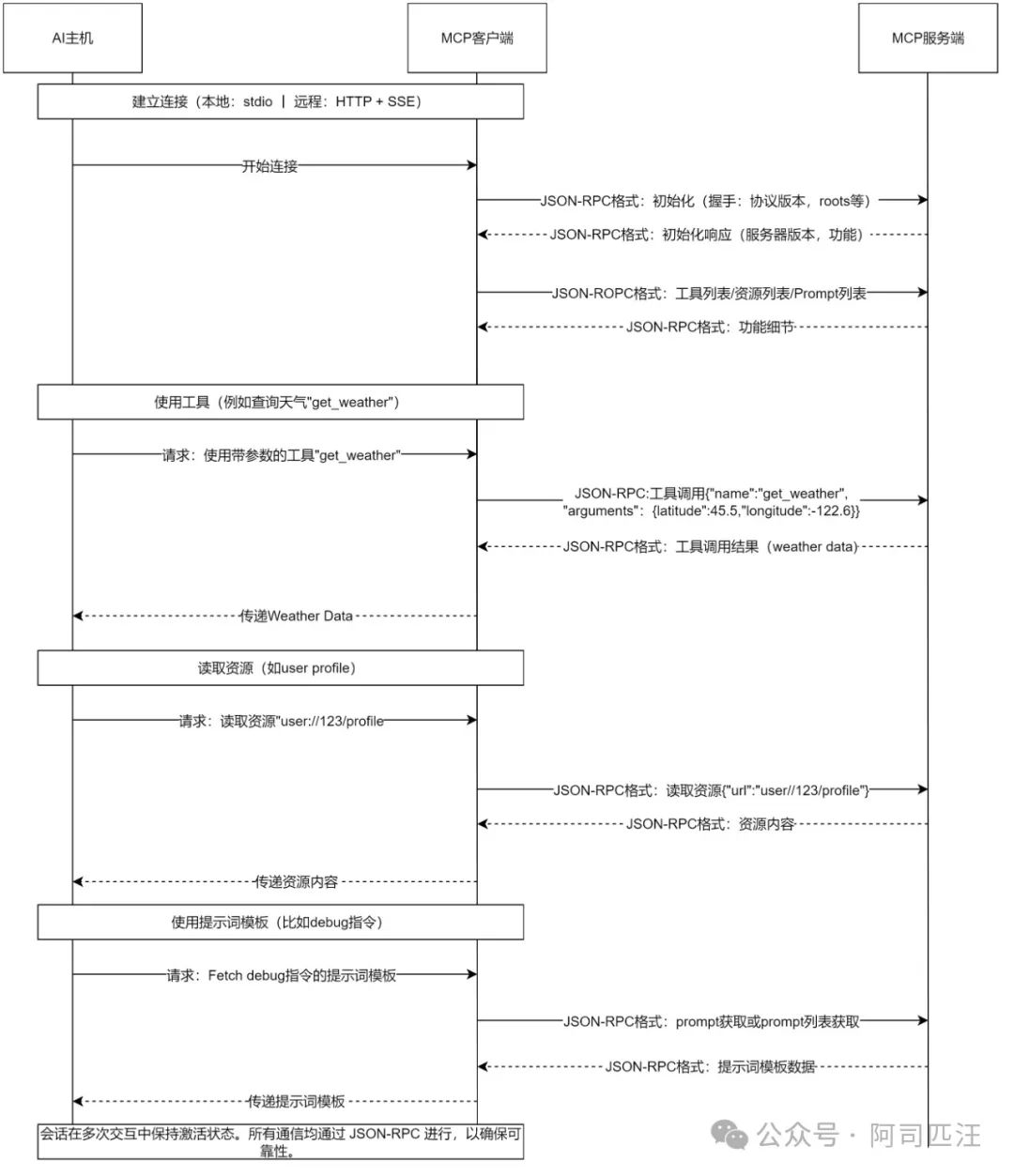

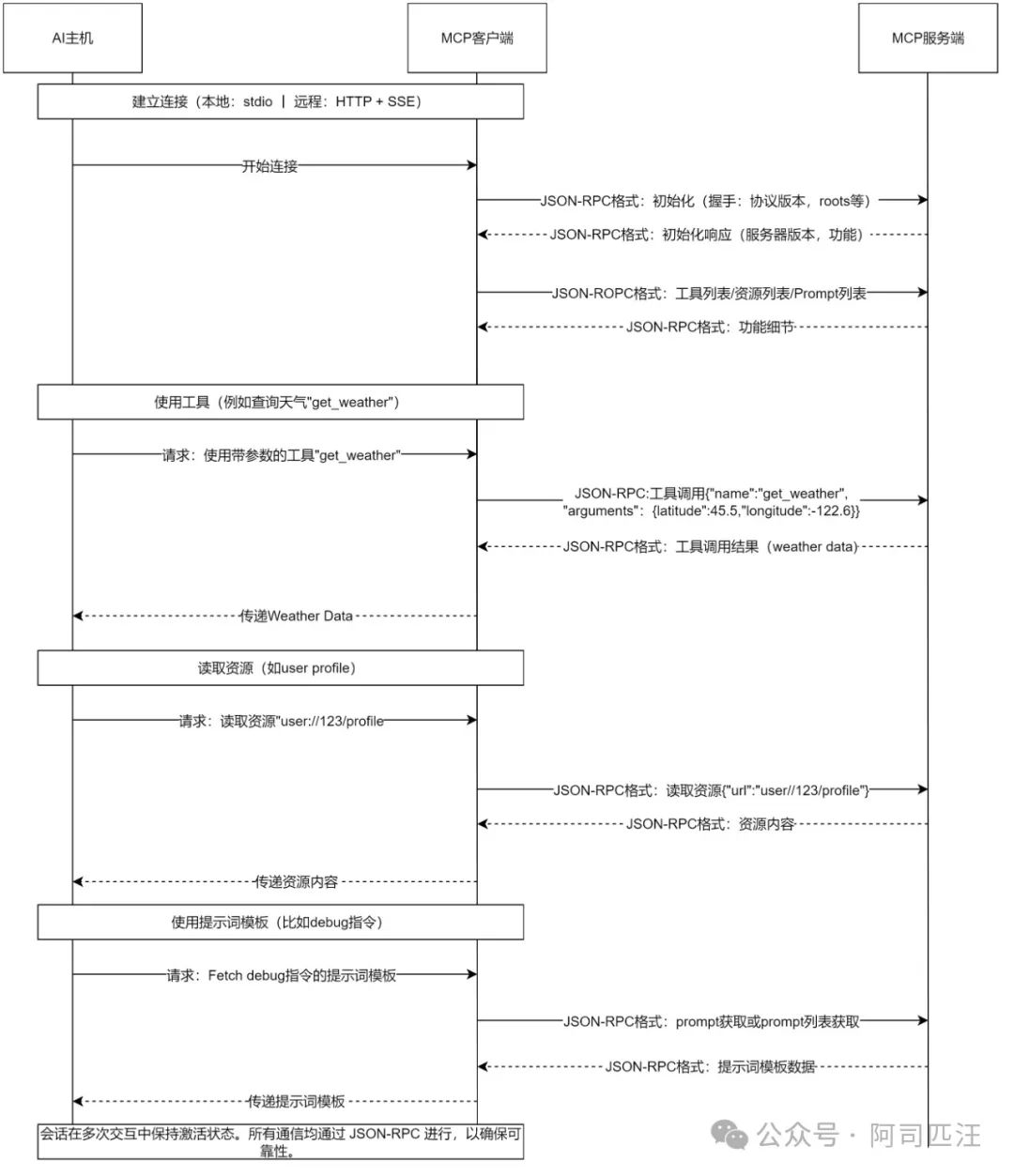

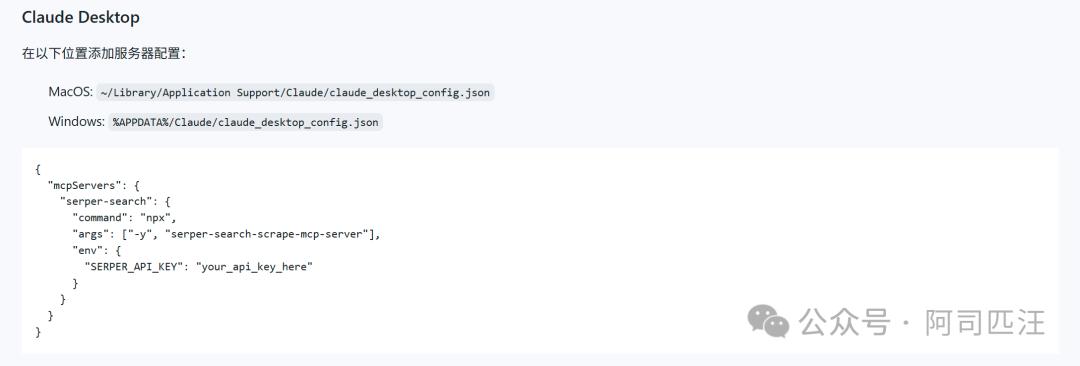

The MCP architecture follows the classic client-server model, with the AI application that hosts the model acting as the client. When the model needs to perform a task, such as querying the weather, it analyzes the request and produces a structured tool call, which the MCP client sends in the message format defined by the protocol.
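On the wire, MCP messages are JSON-RPC 2.0. The following minimal sketch shows the client side of the weather example: serializing a `tools/call` request whose `params` carry the tool `name` and its `arguments`. The tool name and city are hypothetical, and both the transport (for example standard input/output or HTTP) and the `initialize` handshake that precedes tool calls are omitted.

```python
import itertools
import json

# Monotonically increasing request ids, so each response can be matched to its request.
_ids = itertools.count(1)


def make_tool_call(name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 `tools/call` request for the named tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)


if __name__ == "__main__":
    # The host has decided, from the model's output, to call the hypothetical weather tool.
    print(make_tool_call("get_weather", {"city": "Beijing"}))
```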

The request goes to an MCP server, on which the available tools, such as a weather query API, are registered. When the server receives the request, it locates and invokes the corresponding tool, for example by calling the weather service to fetch data. Finally, the server packages the result in the format the MCP protocol specifies and returns it to the AI application's client.
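The server-side steps above can be sketched as follows. This minimal Python example assumes a hypothetical in-process registry and a canned `get_weather` handler standing in for a real weather API. It dispatches a `tools/call` request to the registered handler and wraps the output in an MCP-style result (a `content` list of text items plus an `isError` flag). Unknown methods and unknown tools are answered with the standard JSON-RPC 2.0 error codes -32601 and -32602. Real servers, such as those built with the official MCP SDKs, also handle capability negotiation, schema validation, and transports.

```python
import json
from typing import Callable

# Hypothetical in-process registry: tool name -> handler taking the arguments dict.
TOOLS: dict[str, Callable[[dict], str]] = {}


def tool(name: str):
    """Decorator that registers a handler under the given tool name."""
    def register(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("get_weather")
def get_weather(args: dict) -> str:
    # Canned data standing in for a real weather service call.
    return f"Sunny, 22°C in {args['city']}"


def error(req_id, code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC `tools/call` request and wrap the tool's output."""
    req = json.loads(raw)
    req_id = req.get("id")
    if req.get("method") != "tools/call":
        return error(req_id, -32601, "Method not found")
    params = req.get("params", {})
    handler = TOOLS.get(params.get("name"))
    if handler is None:
        return error(req_id, -32602, f"Unknown tool: {params.get('name')}")
    text = handler(params.get("arguments", {}))
    # Tool output goes back as a list of content items plus an isError flag.
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "get_weather", "arguments": {"city": "Beijing"}}}
    print(handle(json.dumps(request)))
```

Registering tools by name keeps the dispatch step a single dictionary lookup, which mirrors the "locate and invoke the corresponding tool" step described above.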

Comparison with other technical solutions

Before MCP emerged, the common solution was a proprietary capability such as function calling, which lets a model call external tools through structured requests. However, these were generally private protocols defined by the major model vendors, and they did not interoperate. Developers had to adapt their tools separately to each vendor's protocol.

MCP aims to become an open industry standard. Unlike a vendor's closed, proprietary protocol, it publishes a detailed specification, and any organization or individual can build compatible servers or clients from it. This openness breaks down technical barriers, helps the tool ecosystem flourish, and keeps developers from being locked into a single platform.

Promoting the democratization of AI application development

The most significant effect of protocol standardization is lower development complexity. Developers no longer need to study the private interface details of each AI model; by learning the MCP standard alone, they can make their tools broadly compatible, saving considerable time and cost on learning and adaptation.

This lower barrier to entry makes it easier for individual developers and small teams to take part in building AI applications. They can focus entirely on creating valuable tools without worrying about compatibility. In the long run, this helps stimulate innovation across the entire ecosystem and can give rise to more diverse, more sophisticated, and more practical AI tools.

The catalytic effect of the open source incident

Not long ago, the rapid open-source replication of Manus sparked heated discussion in the community. Although the incident was controversial, it unexpectedly made the whole industry more keenly aware of the importance of standardized protocols and interfaces. When code can be reused quickly, a clear and open protocol standard becomes the infrastructure that underpins collaboration and innovation.

The discussion prompted more development teams to take a serious look at standardized solutions such as MCP. Many came to see that building and following a good public standard does more for the long-term health of the ecosystem than maintaining private solutions. In effect, the incident accelerated industry consensus on standardizing tool calling protocols.

As the AI tool ecosystem develops, do you think the collaborative spirit of the open source community matters more, or will a powerful unified technical standard prove more decisive? Feel free to share your opinions in the comments, and if you found this article useful, please give it a like.