As enterprise demand for data analysis grows, the performance and cost limitations of traditional centralized tools are becoming increasingly apparent. Many enterprises now urgently need more powerful and cost-effective domestic alternatives.

Inherent limitations of centralized tools

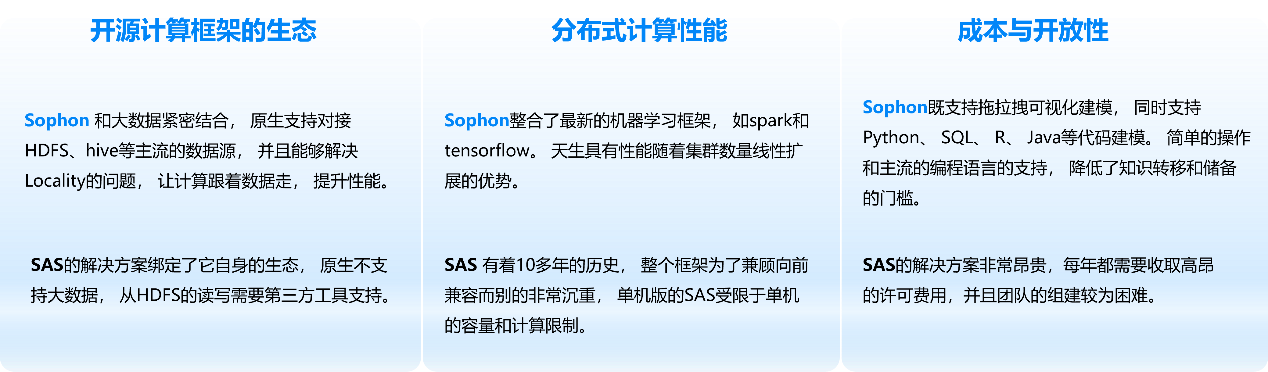

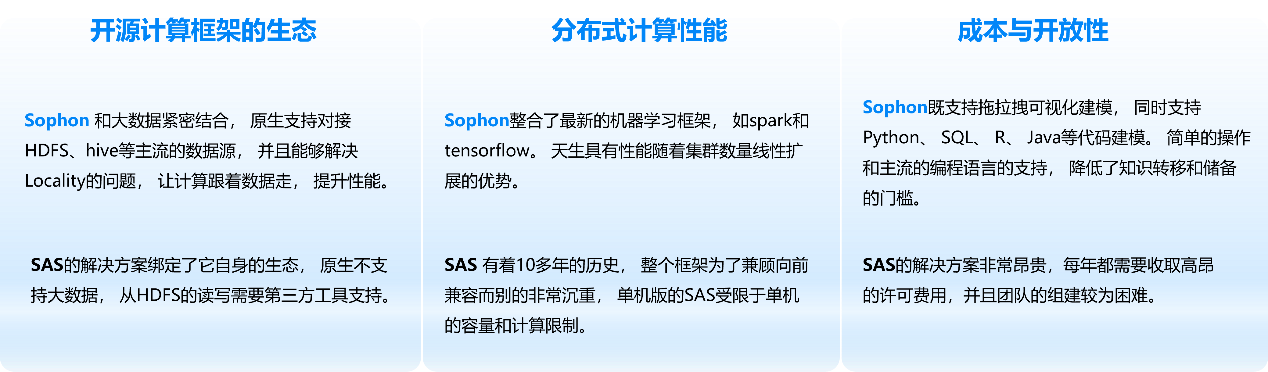

Traditional centralized analysis software runs every computing task on one server or a handful of servers. Once data volumes reach the terabyte or even petabyte scale, hardware resources such as memory and CPU quickly become bottlenecks. The result is slow data processing and, often, very high costs from frequent hardware upgrades.

Such tools often adopt a closed architecture and are extremely difficult to integrate with emerging big data platforms and cloud computing environments. They lack support for distributed storage and computing, so they cannot exploit the parallel processing capabilities of a cluster and struggle with massive datasets and complex machine learning tasks.

Performance advantages of distributed architecture

The core of domestic intelligent analysis tools is a distributed architecture. It spreads data and computing tasks across hundreds or thousands of commodity servers that work together, so that processing capacity scales linearly as servers are added. This approach breaks through the limits of single-machine hardware and keeps pace with continued growth in data volume.

In practice, this kind of distributed architecture has been shown to improve data processing efficiency substantially. At one large financial institution, for example, the time needed to process hundreds of millions of customer transactions fell from hours to minutes, which makes real-time business decisions possible.
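The partition-and-combine idea behind this section can be illustrated with a minimal sketch. It is not any platform's actual engine: threads on one machine stand in for cluster nodes, and the function names (`partition`, `partial_stats`, `distributed_mean`) and the sample transactions are hypothetical. Each worker computes a partial result on its own slice of the data, and only those small partial results are combined at the end.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records, n_parts):
    """Split records into n_parts roughly equal slices (round-robin)."""
    return [records[i::n_parts] for i in range(n_parts)]


def partial_stats(chunk):
    """Work done independently on one 'node': record count and total amount."""
    return len(chunk), sum(amount for _, amount in chunk)


def distributed_mean(records, n_workers=4):
    """Run partial_stats on every partition in parallel, then combine."""
    chunks = partition(records, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_stats, chunks))
    count = sum(c for c, _ in partials)
    total = sum(t for _, t in partials)
    return total / count if count else 0.0


# Hypothetical transactions: (customer_id, amount)
transactions = [(i % 100, float(i % 50)) for i in range(10_000)]
mean_amount = distributed_mean(transactions)
print(mean_amount)  # 24.5
```

Because each partial result is just a count and a sum, adding workers changes how the work is divided but not the final answer, which is the property that lets this style of computation spread across many machines.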

Methods and steps for smooth migration

Switching from foreign tools to a domestic platform is not a simple software replacement but a systematic project. A mature migration method generally proceeds in stages: sorting out the existing business logic, understanding and converting historical data, and parsing and reconstructing the original analysis models.

In practice, the enterprise first needs a deep understanding of the business objectives and the data foundation that the existing analysis models serve. The technical team then works through the code logic of the original models, redesigns it for the new platform, and prepares data that meets the new platform's requirements. Finally, once the results have been verified, the models are migrated and put into production.
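The verification step can take many forms. One common approach, sketched here under assumptions, is a parity check: score the same records with the legacy model and the migrated model, then flag any record whose scores differ by more than a tolerance. The function name `parity_report`, the tolerance, and the sample scores are all hypothetical.

```python
import math


def parity_report(legacy_scores, migrated_scores, abs_tol=1e-6):
    """Compare per-record scores produced by the legacy and migrated models."""
    if legacy_scores.keys() != migrated_scores.keys():
        differing = set(legacy_scores) ^ set(migrated_scores)
        raise ValueError(f"record ids differ: {sorted(differing)}")
    mismatches = {
        rid: (legacy_scores[rid], migrated_scores[rid])
        for rid in legacy_scores
        if not math.isclose(
            legacy_scores[rid], migrated_scores[rid], rel_tol=0.0, abs_tol=abs_tol
        )
    }
    return {
        "checked": len(legacy_scores),
        "mismatches": mismatches,
        "passed": not mismatches,
    }


# Hypothetical scores keyed by record id
legacy = {"c1": 0.125, "c2": 0.5, "c3": 0.875}
migrated = {"c1": 0.125, "c2": 0.5, "c3": 0.9}
report = parity_report(legacy, migrated)
print(report["passed"], report["mismatches"])  # False {'c3': (0.875, 0.9)}
```

A report like this gives the team a concrete go/no-go signal before cutover and points directly at the records that need investigation.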

Comprehensive coverage of functions and capabilities

The domestic analysis platform covers the full range of modeling capabilities through a large library of built-in, high-performance distributed algorithm components. These components provide the same statistical analysis and machine learning functions as traditional tools, which keeps business analysis continuous and consistent.

What's more, new platforms often offer advanced features that traditional tools lack. Model interpretability tools, for example, help business personnel understand the basis for a complex model's decisions, and federated learning enables cross-organizational data collaboration and joint modeling while protecting data privacy.
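To make the federated learning idea concrete, here is a minimal sketch of federated averaging on a toy one-parameter model (y ≈ w · x). It is an illustration only, not how any specific platform implements it, and the names `local_update` and `federated_average` and the sample data are hypothetical. Each party trains on its own data and shares only the resulting weight; the coordinator never sees the raw records.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """One party refines the shared weight using only its own local data."""
    for _ in range(epochs):
        # Gradient of the mean squared error for the model y = w * x
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(parties, rounds=50, w=0.0):
    """Coordinator averages local weights, weighted by each party's sample count."""
    total = sum(len(data) for data in parties)
    for _ in range(rounds):
        updates = [(local_update(w, data), len(data)) for data in parties]
        w = sum(w_local * n for w_local, n in updates) / total
    return w


# Hypothetical local datasets; both follow y = 3 * x but stay with their owners
party_a = [(1.0, 3.0), (2.0, 6.0)]
party_b = [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)]
w_global = federated_average([party_a, party_b])
print(round(w_global, 6))  # 3.0
```

Real deployments typically add protections on the shared updates as well, since model weights can still leak information about the training data.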

Expanded high-end application scenarios

A complete domestic solution extends beyond the core data science platform into more cutting-edge fields. Its edge computing platform deploys analysis capabilities on devices at the edge of the network and processes data where it is generated, meeting the real-time response requirements of scenarios such as the Industrial Internet of Things.

The privacy computing platform safeguards the secure circulation and collaborative use of data. It allows multiple parties to perform joint analysis without exposing their raw data, which addresses the problem of data silos and provides a technical foundation for scenarios that require data fusion, such as financial risk control and medical research.
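One well-known technique for joint analysis without revealing raw inputs is additive secret sharing. The sketch below is an assumption chosen for illustration, not a description of the platform's actual protocol. It computes a joint total across several parties, and `MODULUS`, `make_shares`, `secure_sum`, and the sample values are hypothetical. Each party splits its private value into random shares, so no single share reveals anything about the value, yet the shares still add up to the correct total.

```python
import secrets

MODULUS = 2**61 - 1  # illustrative modulus; all arithmetic is done mod this value


def make_shares(value, n_parties):
    """Split value into n_parties random shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values):
    """Joint total of non-negative private values, without pooling the raw inputs."""
    n = len(private_values)
    # Row i holds the shares that party i hands out, one per participant
    distributed = [make_shares(v, n) for v in private_values]
    # Party j adds up the shares it received and publishes only that subtotal
    subtotals = [sum(row[j] for row in distributed) % MODULUS for j in range(n)]
    return sum(subtotals) % MODULUS


# Hypothetical private figures held by three institutions
exposures = [1200, 3400, 560]
joint_total = secure_sum(exposures)
print(joint_total)  # 5160
```

The result is exact as long as the true total is smaller than `MODULUS`. Production systems add authenticated channels and protection against colluding or dishonest parties, which this sketch omits.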

Business value brought by practical applications

At one large state-owned bank, replacing the original tools allowed the bank to mine its full customer dataset in depth. On the new distributed platform, it built a customer churn early-warning model that accurately identifies likely churners among high-value customers, providing a key basis for retention strategies.

The value of this migration lies not only in the technical improvement but also in a lower barrier to use for business personnel, who can build models through visual drag-and-drop operations. In addition, unified model management and monitoring make the entire life cycle, from model input and development to post-deployment application, more efficient and controllable, ultimately helping enterprises achieve digital transformation.

Has your company run into the bottlenecks of traditional analysis tools in its day-to-day work, and how are you evaluating and planning replacement options? You are welcome to share your experiences and opinions in the comments. If you found this article helpful, please give it a like.
